Varnish + Umbraco

Disclaimer

I am no Varnish guru and am only really using this for fun and learning.

So please take anything here with a grain of salt and maybe consult with someone who actually knows Varnish before using anything here.

Why do this?

So I've long been super focused on having absolute minimal downtime with my Umbraco platforms and making them as stable as possible.

I also can't stop myself from being curious about these technologies 🙂

To this end I've explored the vast features of Umbraco and its built-in support for load balancing.

These features work pretty well, but require very careful development and architecture of the platform to properly support them.

Any misconfiguration can lead to very serious issues or unintended behavior that is remarkably hard to track down.

This, along with other problems I've encountered when building scalable Umbraco instances, led me to the magic of frontend caches like Cloudflare, Azure Front Door and Varnish.

These caching solutions are easily scalable and can technically be dropped in on top of an existing codebase.

Note: Any use of cookies will, however, break Varnish caching unless Varnish is configured to handle them.

These drop-in solutions are a perfect way to slap a little extra stability, and complexity, onto a project to hit the zero downtime deployment milestone.

Important notice!

THIS WILL NOT WORK FOR THE BACKOFFICE AND SHOULD NOT BE USED FOR IT!

Only apply this in a setup where you can reliably split traffic for backend and frontend requests.

How to implement this

Adding Varnish to an Umbraco platform requires very little for the most basic setup.

However, since I used this for my website project, I'll be sharing how it is implemented for that.

This adds a few extra steps, but most of it is pretty easily translated to other services or removable if unnecessary.

Varnish

Configuring Varnish is done using the Varnish Configuration Language (VCL).

Below you'll find a basic example that can keep serving clients from the cache for up to 24 hours after the backend is marked unhealthy.

# default.vcl
vcl 4.1;

import std;

backend default {
    .host = "umbraco";
    .port = "8080";

    # If 3 out of the last 5 polls succeeded the backend is considered healthy
    .probe = {
        .url = "/healthz";
        .timeout = 1s;
        .interval = 5s;
        .window = 5;
        .threshold = 3;
    }
}

sub vcl_recv {
    # Short grace when backend is healthy
    if (std.healthy(req.backend_hint)) {
        set req.grace = 10s;
    }

    # Ignore cookies for caching purposes
    unset req.http.Cookie;
}

sub vcl_backend_response {
    if (beresp.status >= 500 && bereq.is_bgfetch) {
        return (abandon);
    }

    # Long grace when backend is unhealthy
    # This serves content from cache for longer when the backend is unhealthy
    set beresp.grace = 24h;

    # How long to cache content
    set beresp.ttl = 5m;

    if (beresp.http.Set-Cookie) {
        unset beresp.http.Set-Cookie;
    }

    if (beresp.http.Cache-Control) {
        unset beresp.http.Cache-Control;
    }

    if (beresp.http.Expires) {
        unset beresp.http.Expires;
    }
}

# For debugging
#sub vcl_deliver {
#    if (obj.hits > 0) {
#        set resp.http.X-Cache = "HIT";
#    } else {
#        set resp.http.X-Cache = "MISS";
#    }
#}

Note that ALL cookie headers are stripped, so any reliance on these will cause conflicts and problems.

For more information on the problem with cookies and caching, see the Varnish Docs:

https://www.varnish-software.com/developers/tutorials/removing-cookies-varnish/
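If stripping every cookie is too blunt for your site, one option is to let selected requests bypass the cache and only strip cookies from the rest. The following is a minimal sketch under a few assumptions, not a tested setup: it assumes the backoffice lives under the default /umbraco path, and it uses a made-up cookie name, my_session, as a stand-in for whatever cookie your site depends on. The two subs are meant to replace vcl_recv and vcl_backend_response in default.vcl, which still needs its import std; line and backend definition.

```vcl
sub vcl_recv {
    # Short grace when backend is healthy (same as in default.vcl)
    if (std.healthy(req.backend_hint)) {
        set req.grace = 10s;
    }

    # Assumption: the backoffice uses the default /umbraco path.
    # Send it straight to the backend, cookies included, and never cache it.
    if (req.url ~ "^/umbraco") {
        return (pass);
    }

    # Hypothetical cookie name: requests carrying it also bypass the cache.
    if (req.http.Cookie ~ "(^|;\s*)my_session=") {
        return (pass);
    }

    # Everything else is treated as anonymous and cacheable
    unset req.http.Cookie;
}

sub vcl_backend_response {
    # Responses to passed requests are never cached, so keep their
    # Set-Cookie and caching headers untouched.
    if (bereq.uncacheable) {
        return (deliver);
    }

    if (beresp.status >= 500 && bereq.is_bgfetch) {
        return (abandon);
    }

    set beresp.grace = 24h;
    set beresp.ttl = 5m;

    unset beresp.http.Set-Cookie;
    unset beresp.http.Cache-Control;
    unset beresp.http.Expires;
}
```

Treat the /umbraco rule as a safety net only. It keeps backoffice responses out of the cache, but it is not a replacement for routing backoffice traffic around Varnish.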

This configuration will work for most Umbraco platforms running in Docker or similar container setups.

If you wish to use this outside Docker, then adjust the configuration accordingly.

Doing this should mainly require you to change the .host and the .port for the backend.
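As a rough illustration of that change, the backend block could look like the sketch below when Umbraco runs on the same machine as Varnish. The address 127.0.0.1 and port 5000 are assumptions for the example; use whatever address and port your Umbraco site actually listens on.

```vcl
backend default {
    # Assumption: Umbraco runs on the same host and listens on port 5000
    .host = "127.0.0.1";
    .port = "5000";

    # Same health probe as in default.vcl
    .probe = {
        .url = "/healthz";
        .timeout = 1s;
        .interval = 5s;
        .window = 5;
        .threshold = 3;
    }
}
```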

Any other configuration is advanced and you're on your own then ✌️

Lacking from this is ANY programmatic cache invalidation.

This is not something I have implemented yet and therefore can't share any code examples.

I'm pretty confident this can be done using Umbraco Webhooks or Notifications to trigger a BAN for a specific page.

Updating generic/re-used HTML code is also not a feature I have looked at yet.

This can be particularly problematic when changes happen to a site's footer or header, which means all pages should be invalidated.
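On the Varnish side, the receiving end of such a BAN could look roughly like the sketch below. It is an untested starting point built on assumptions: the ACL name invalidators is made up, the 172.28.0.0/16 range is the Docker subnet used elsewhere in this post, and the X-Ban-Regex header is a hypothetical convention for invalidating many pages at once (for example after a header or footer change). The check should be merged into the top of the existing vcl_recv.

```vcl
# Assumption: only clients on the Docker network may invalidate the cache
acl invalidators {
    "172.28.0.0"/16;
}

sub vcl_recv {
    if (req.method == "BAN") {
        if (client.ip !~ invalidators) {
            return (synth(405, "Not allowed"));
        }

        if (req.http.X-Ban-Regex) {
            # Hypothetical header holding a URL regex, e.g. "^/" for the whole site
            ban("req.url ~ " + req.http.X-Ban-Regex);
        } else {
            # Invalidate exactly the requested URL
            ban("req.url == " + req.url);
        }

        return (synth(200, "Ban added"));
    }
}
```

With this in place, a request such as curl -X BAN http://varnish/some/page sent from inside the Docker network would invalidate that single page, and adding -H "X-Ban-Regex: ^/" would invalidate everything. Newer Varnish versions also offer std.ban() as the preferred form of ban(), so check the docs for the version you run.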

Varnish has a book and some pretty okay docs that you might want to check out before diving deeper into the VCL config.

By then you might even become a Guru.

Umbraco

Very little is actually required to be configured in Umbraco for this setup to work.

One vital configuration is to set Varnish as a trusted proxy, either by allowing its network or by allowing its host.

This is because headers and other important information are parsed from the Varnish requests.

To do this safely, we must declare the Varnish proxy as trusted.

This can be done using the following code for a Docker network:

// Program.cs
using System.Net;
using Microsoft.AspNetCore.HttpOverrides;
using IPNetwork = Microsoft.AspNetCore.HttpOverrides.IPNetwork;

// The rest of the code in your Program.cs

// https://github.com/umbraco/Umbraco-CMS/issues/16741
// Forward headers needed to pass information from the reverse proxy to the application
builder.Services.Configure<ForwardedHeadersOptions>(options =>
{
    options.ForwardedHeaders =
        ForwardedHeaders.XForwardedFor |
        ForwardedHeaders.XForwardedProto |
        ForwardedHeaders.XForwardedHost;

    options.KnownNetworks.Add(new IPNetwork(IPAddress.Parse("172.28.0.0"), 16));
});

// The rest of the code in your Program.cs

var app = builder.Build();

await app.BootUmbracoAsync();

app.UseForwardedHeaders();

UPDATE - 04-01-2025:

As of Umbraco 17 or .NET 10, the example above is using obsolete code.

The following line should be updated:

// -- options.KnownNetworks.Add(new IPNetwork(IPAddress.Parse("172.28.0.0"), 16)); // .NET 9 or lower
// ++ options.KnownIPNetworks.Add(new System.Net.IPNetwork(System.Net.IPAddress.Parse("172.28.0.0"), 16)); // .NET 10+

I'm not going to dive too much into what these settings do, but the TL;DR is:

ForwardedHeaders selects which forwarded headers are used instead of the values from the direct connection, e.g. XForwardedProto, which indicates which protocol (HTTP or HTTPS) was used by the client.

KnownNetworks is used to inform Umbraco that this network segment is trusted. Forwarded headers from IPs outside this CIDR range are ignored.

The alternative is to allow each host by IP specifically.

Additional information on proxy management in ASP.NET is available in the Microsoft Docs:

https://learn.microsoft.com/en-us/aspnet/core/host-and-deploy/proxy-load-balancer

Now to ensure that Varnish can check the health of the backend, I highly recommend adding a health check endpoint.

It can be a basic check like the example below:

// Program.cs
app.MapGet("/healthz", () => Results.Ok("Healthy"));

Or it can be a sophisticated check that validates many parts of your platform.

Why not just ping the frontpage, you might ask?

Well, as I learned the hard way, just pinging the frontpage of an Umbraco platform can have many unwanted side effects.

Notably, these things were a big problem for me:

- Assets on the frontpage resolved to a 404
  - This caused Varnish to mark the backend as down, even if it was OK
- Umbraco 404 and 500 error pages actually produce a 200 OK response
  - This is expected as the browser etc. would otherwise mark the whole server as down
  - Varnish will of course happily process AND CACHE requests to these pages 😅

So, to make life easier and to follow best practice from the Varnish people, we use a health endpoint.

Traefik

Let me start by saying that you can absolutely just use Varnish as your entrypoint/primary proxy.

However, that was a bit too advanced for me, so I opted for Traefik which I know and like.

You might have to know a little about Docker and Traefik for this part, but it should be mostly plug-n-play.

Building a Varnish container can be done using Docker compose and pointing the container to the VCL file from the previous step.

# Dockerfile
FROM docker.io/varnish:fresh-alpine AS varnish
COPY Varnish/default.vcl /etc/varnish/

The accompanying compose file for the Dockerfile:

# docker-compose.yaml
services:
  traefik:
    image: traefik:v3
    container_name: traefik
    hostname: traefik
    command:
      #- "--api.insecure=true" # Enable dashboard on :8080
      - "--providers.docker=true"
      - "--providers.docker.exposedbydefault=false"
      - "--entrypoints.web.address=:80"
      - "--entrypoints.websecure.address=:443"
    ports:
      - "80:80"
      - "443:443"
      - "8081:8080" # Traefik dashboard
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock:ro
    networks:
      - umbnet
    restart: unless-stopped

  varnish:
    image: varnish:stable
    container_name: Varnish
    hostname: varnish
    build:
      target: varnish
      context: ./src
    tmpfs:
      - /var/lib/varnish/varnishd:exec
    environment:
      - VARNISH_SIZE=8G
    networks:
      - umbnet
    restart: unless-stopped
    ulimits:
      memlock:
        soft: -1
        hard: -1
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.varnish.rule=Host(`${FRONTEND_URL:-umbraco.local}`)"
      - "traefik.http.routers.varnish.entrypoints=web,websecure"
      - "traefik.http.routers.varnish.tls=true"
      - "traefik.http.services.varnish.loadbalancer.server.port=80"

networks:
  umbnet:
    driver: bridge
    ipam:
      config:
        - subnet: 172.28.0.0/16

You should adjust some settings for your own setup, like the build: context: ./src so that it points to the correct file location for the Dockerfile.

For local development etc. you can also add Umbraco to the services section to check how Varnish works and do some development on the VCL.

An important part of the config is the networks section, as it defines the same network as we use in our Program.cs file.

If you changed that then this subnet should also be adjusted.

Note: This config does NOT work with IPv6.

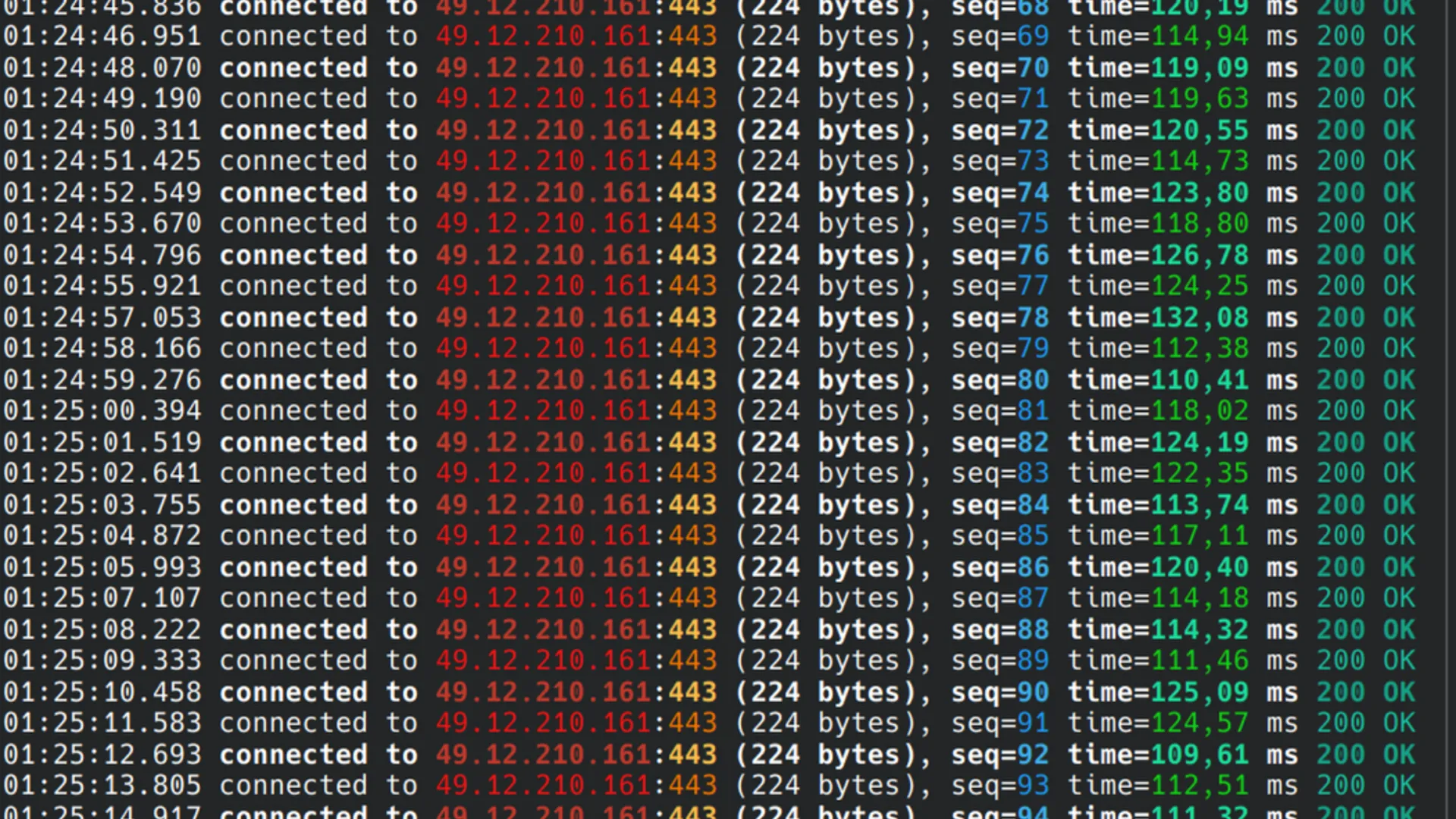

The results - With Varnish

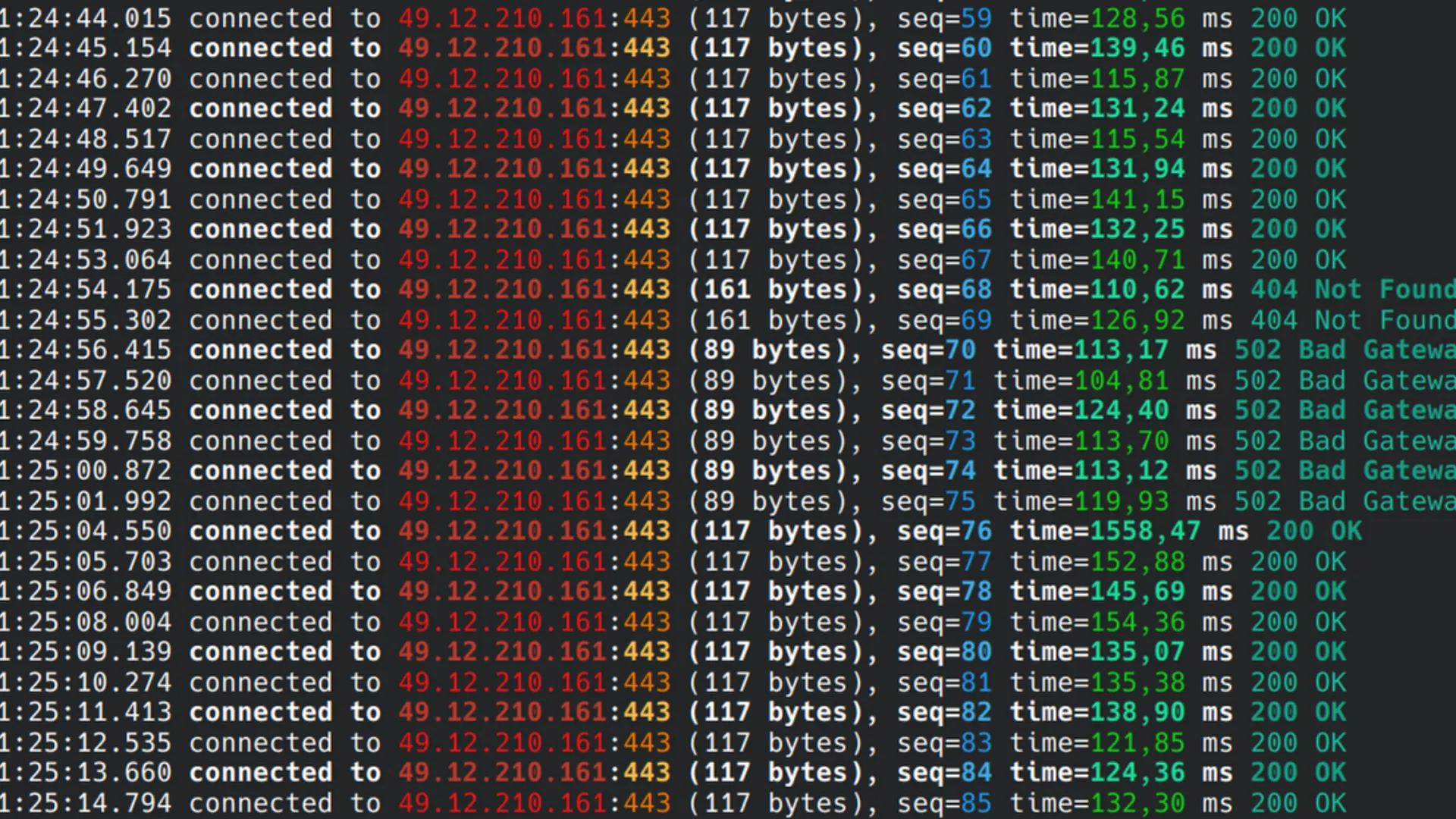

The results - No Varnish

Rounding up

The small amount of config needed and the potential gains from adding Varnish are really awesome, but they come with some major downsides.

No cookies is a real downer and would likely be a show stopper for many people.

It can be configured but it quickly becomes quite the hassle.

For projects where this can be applied the gains are in my opinion worth the technical overhead.

You can make deployments without worrying about affecting the end users.

Scaling Varnish is much easier than scaling Umbraco.

Placing everything inside a single docker-compose.yaml is a big win for me in managing my infrastructure.

As shown in the images above, we can achieve 0-downtime deployment for end users.

This is with a very limited amount of resources and using simple configuration settings.